New Instagram safety tool will stop children receiving nude pictures

The owner of Facebook, WhatsApp and Instagram is going to introduce a new safety tool to stop children from receiving nude pictures – and discourage sending them.

It follows months of criticism from police chiefs and children’s charities over Meta’s decision to encrypt chats on its Messenger app by default.

The company argues that encryption protects privacy, but critics have said it makes it harder for the company to detect child abuse.

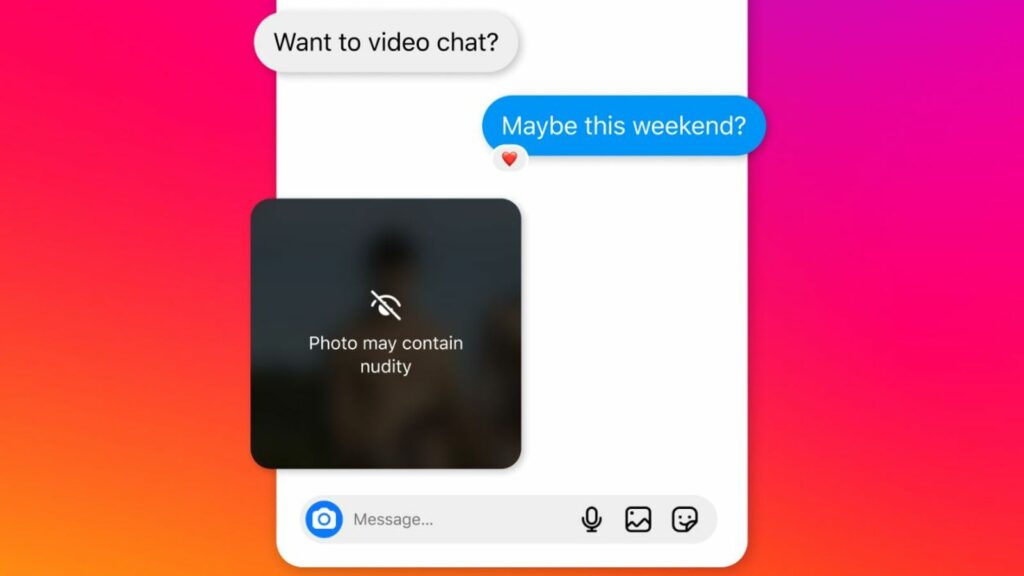

Pic: Meta

The new nudity protection feature for Instagram direct messages will blur images detected as containing nudity, and prompt users to think before sending nude images.

It will be turned on by default for users who say they are under 18, and Instagram will show a notification to adult users to encourage them to turn it on.

Meta’s understanding of a user’s age is based on how old someone says they are when they sign up, so it is not verified by the platform.

Adults are restricted from starting private conversations with users who say they are under 18 – but under the new measures coming in later this year, Meta won’t show the “message” button on a teenager’s profile to potential “sextortion” accounts, even if they’re already connected.

Sextortion is when children are blackmailed with the threat of compromising images being sent to family or released on social media unless money is paid.

“We’re also testing hiding teens from these accounts in people’s follower, following and like lists, and making it harder for them to find teen accounts in search results,” representatives said in a blog post published on Thursday.

Earlier this year, police chiefs said that children sending nude images had contributed to a rise in the number of sexual offences committed by children in England and Wales. It is considered a crime to take, make, share or distribute an indecent image of a child who is under 18.

Rani Govender, senior policy officer at the NSPCC, said the new measures are “entirely insufficient” to protect children from harm – and Meta must go much further to tackle child abuse on its platforms.

“More than 33,000 child sexual abuse image crimes were recorded by UK police last year, with over a quarter taking place through Meta’s services,” she said.

“Meta has long argued that disrupting child sexual abuse in end-to-end encrypted environments would weaken privacy but these measures show that a balance between safety and privacy can be found.”

Ms Govender added: “A real sign of their commitment to protecting children would be to pause end-to-end encryption until they can share with Ofcom and the public their plans to use technology to combat the prolific child sexual abuse taking place on their platforms.”

Susie Hargreaves, chief executive of the Internet Watch Foundation, said the charity applauded any efforts by tech companies to safeguard the children using their social media platforms.

She added: “However, while the new tool is a welcome move by Meta, any potential benefits will be undermined by its decision to roll out end-to-end encryption on its messaging channels.

“By doing so, it is willfully turning a blind eye to child sexual abuse.

“More can, and should be done, to protect the millions of children who use Meta’s services.”
